Small Language Models Outperform Large Models for Enterprise Use

Enterprises prefer SLMs over LLMs for targeted accuracy, cost-efficiency, privacy control, and domain-specific reliability, enabling better outcomes with less infrastructure and enhanced data governance.


Eric Sanders

7/14/2025 · 4 min read

Small Models, Big Wins: Why Enterprises Are Choosing SLMs Over LLMs

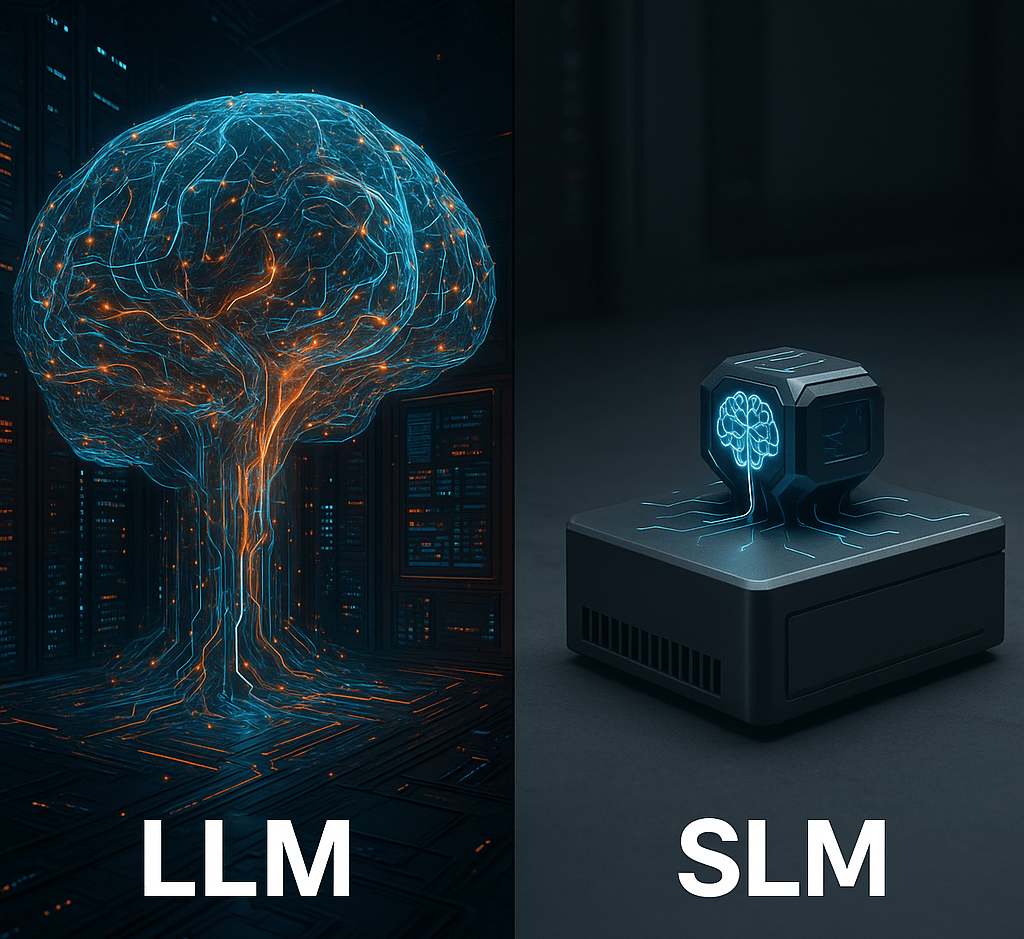

In the relentless march of artificial intelligence, large language models (LLMs) have captured headlines and imaginations alike. Here’s a truth that often gets overlooked, though: bigger isn’t always better, especially when it comes to enterprise AI deployments. More and more, companies are turning to small language models (SLMs), and, I think, for very practical reasons. Enterprises prefer SLMs over LLMs for targeted accuracy, cost-efficiency, privacy control, and domain-specific reliability. It’s a choice that’s reshaping AI adoption, making it smarter and more sustainable.

Why Size Doesn’t Always Equal Success

LLMs like GPT-4 and others have proven capable of amazing feats like chatting, writing, coding, and more. Yet their prowess often comes at a steep price that can be tough for companies to accept: massive computational resources, significant latency, high operational costs, and tricky data privacy concerns. Enterprises that seek precision, efficiency, and control find these trade-offs less than ideal. Small language models, or SLMs, have emerged as the no-nonsense alternative. These are AI models designed with specific tasks, narrower knowledge domains, and constrained computational needs in mind, in order to reduce cost and improve speed and safety. Rather than chasing the ideal of universal intelligence, SLMs show they can excel at what companies need most: practical, relevant, and consistent performance.

To understand why enterprises are increasingly favoring SLMs, I've been considering the following key factors:

Targeted Accuracy and Domain Specialization

An enterprise doesn’t always need a model that knows everything; it needs one that knows its everything. That means understanding the specialized terminology, industry regulations, customer nuances, and operational peculiarities specific to a business.

- Focused training data: SLMs can be trained on proprietary datasets and niche knowledge bases that enhance understanding within a specific domain.

- Less noise, more relevance: By avoiding the overwhelming breadth of general-purpose LLMs, SLMs can reduce irrelevant or nonsensical outputs.

As one industry expert put it, “SLMs deliver more reliable insights because they are built around the unique context and requirements of the enterprise.” This context-aware approach can lead to higher accuracy, directly impacting business outcomes, whether in finance, healthcare, manufacturing, or any other sector.

Cost-Efficiency Without Sacrificing Performance

Running LLMs has proven highly resource-intensive. The sheer size of many larger models demands pricey GPU clusters, enormous memory, and substantial energy, and it all adds up to a hefty operational bill. Enterprises, however, operate under budget constraints and need sustainable solutions. SLMs shine here by offering:

- Smaller computational footprints: less infrastructure, less power usage, less maintenance.

- Lower latency: faster responses with less hardware overhead.

- Easier scalability: companies can deploy multiple SLM instances across various departments without breaking the bank.

For organizations, this translates into real savings and flexibility, making AI adoption feasible well beyond headline-grabbing tech labs.

Reinforcing Privacy and Governance

Data privacy is non-negotiable for enterprises managing sensitive information. Using massive cloud-hosted LLMs often means exposing data to third-party providers, which risks compliance violations under regulations like GDPR, HIPAA, or CCPA.

SLMs frequently offer more control:

- On-premises deployment: keeping data strictly within firewalls.

- Selective access and auditing: easier to monitor and manage data flows.

- Customizable governance rules: tailoring AI behavior to meet regulatory demands.

This enhanced data governance capability is why many risk-averse enterprises shy away from the broad openness of LLM platforms. SLMs provide a safer AI foundation that fosters trust internally and with customers.

Lean Infrastructure, Faster Integration

Finally, enterprises need AI solutions that integrate smoothly into existing workflows without requiring massive overhauls.

- SLMs can be fine-tuned faster and cheaper on existing infrastructure.

- They enhance current systems rather than replacing them.

- Their relative simplicity accelerates deployment cycles and reduces dependency on scarce AI specialists.

This adaptability helps businesses move from experimentation to production faster, delivering tangible value without the typical bottlenecks associated with scaling up LLMs.

A Shift Toward Smaller Language Models

What can we glean from this growing preference for SLMs? Well, the enterprise AI journey is not always about blindly pursuing the biggest, flashiest models. Instead, I'm starting to see it as a matter of making intelligent trade-offs that serve strategic goals:

- Precision over breadth: Narrow models aligned with business domains outperform broad-brush giants in accuracy and relevance.

- Efficiency over extravagance: Streamlined models that cost less and run faster boost feasibility and user adoption.

- Control over convenience: Maintaining tight data privacy and regulatory compliance is non-negotiable, and it is also a competitive advantage.

- Integration over isolation: AI must be embedded into the fabric of operations in a manageable way.

What Does This Mean for the Future of AI in Business?

In an era of hyper-accelerated technological advancement, where “AI hype” often overshadows sober strategy, enterprises choosing SLMs remind us that technology decisions are deeply human and that our tools can be shaped to fit our needs.

- Who benefits? Companies and employees who need AI to work for them, not complicate or control them.

- What’s at stake? The ability to innovate responsibly without sacrificing privacy or breaking budgets.

- How will this reshape the workforce? By making AI accessible and dependable, SLMs empower workers rather than replace them.

- When will this trend redefine AI standards? Likely sooner than the next wave of giant model announcements.

At its core, the shift we are seeing from LLMs to SLMs is about aligning technology with the needs and values of people and enterprises. It’s about taking back control and demanding solutions that make sense, not just headlines. As enterprises continue navigating this complex landscape, the question remains:

How will your organization balance AI ambition with pragmatic constraints to create meaningful, trustworthy outcomes?

Finding the answer will not, I think, be a technical challenge. It’s a human imperative: making sure we use these tools in ways that deliver the best possible outcomes while ensuring accuracy and safety.


Contact Us

Need a custom solution? Let’s connect!

eric.sanders@thedigiadvantagepro.com

772-228-1085

© 2025. All rights reserved.