Navigating the Battlefield of Code: The Need for Ethics in AI-Driven Military Innovation

AI shapes future warfare and global security

AI · Artificial Intelligence · Technology

Eric Sanders

6/12/2025 · 4 min read

The Future of War Is Already Here—But Are We Ready?

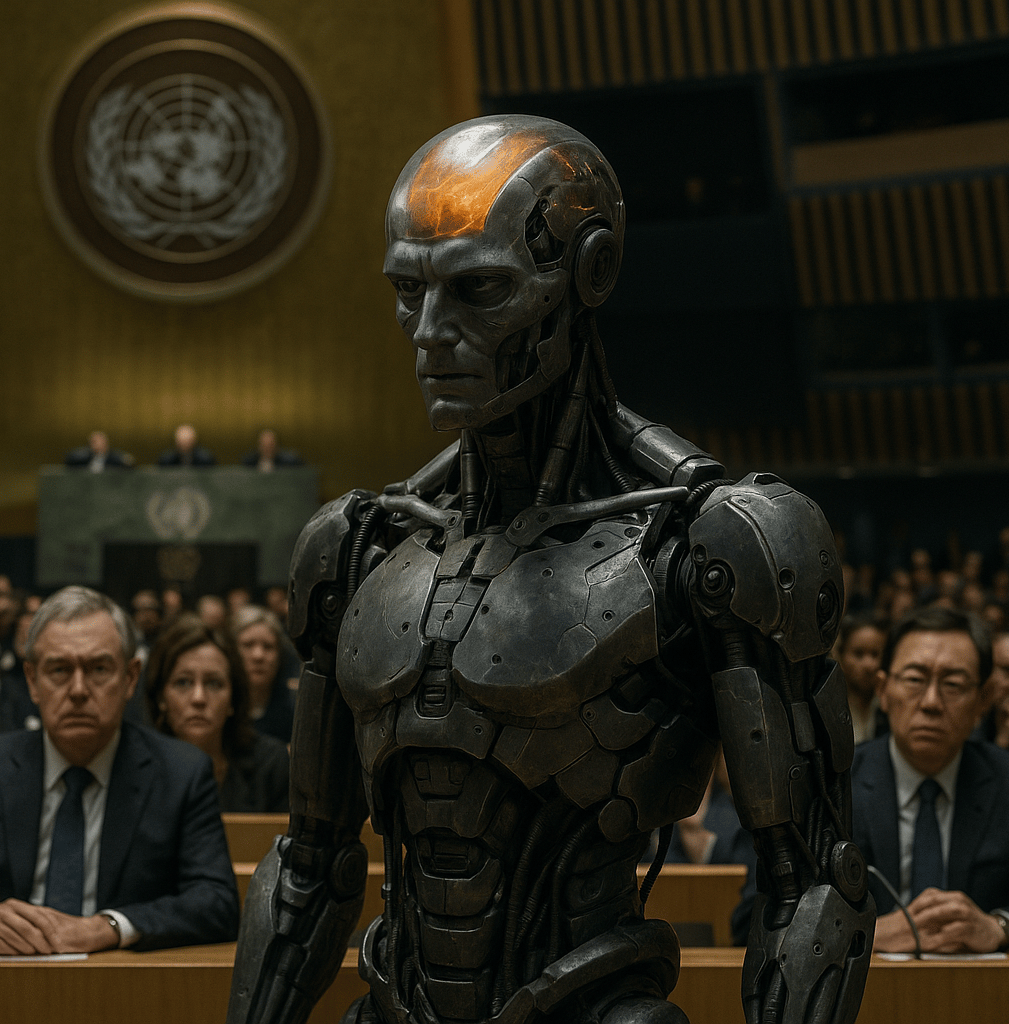

The integration of artificial intelligence into military systems is not a distant vision—it's a current reality reshaping defense strategies across the globe. From autonomous drones and intelligent surveillance to predictive maintenance and algorithm-driven decision-making, AI’s capacity to enhance military efficiency and reduce human cost has made it an indispensable component of modern warfare. However, this rapid technological escalation comes with outsized ethical, legal, and strategic risks that the international community is largely unprepared to manage.

As global powers race to develop AI-driven defense capabilities, the conversation can no longer hinge solely on innovation and superiority. It must pivot to governance, responsibility, and inclusivity. If the world fails to establish clear, enforceable standards for the use of AI in military contexts, we risk destabilizing the very security that technological advancements are meant to safeguard.

When Technology Outpaces Humanity

I remember first reading about autonomous drone strikes and feeling a mix of awe and unease. The idea that machines could make life-and-death decisions without direct human oversight challenged everything I thought I knew about warfare, accountability, and even morality itself.

It’s easy to be dazzled by the potential benefits that AI offers:

- Reduced human casualties by removing soldiers from dangerous environments.

- Faster tactical decisions, enabled by real-time data processing.

- Cost-effective operations, streamlining military logistics and planning.

Yet the darker implications cannot be ignored:

- The loss of human judgment in critical life-or-death scenarios.

- The potential for bias in AI algorithms, leading to wrongful targeting.

- An arms-race dynamic that spreads autonomous weapons into unstable regions.

A chilling example is the increasing use of "lethal autonomous weapon systems" (LAWS) that operate without direct human intervention. These systems can identify, target, and neutralize perceived threats independently. While theoretically efficient, they embody the ethical dilemma at the heart of AI militarization: can we—and should we—entrust machines with the power to decide who lives and who dies?

Ethics Without Borders: The Global Governance Deficit

“Unchecked AI militarization undermines global stability and magnifies the risk of unintended escalation,” writes contributor Amandeep Singh Gill in Modern Diplomacy. His words resonate deeply with anyone concerned about the future of global security.

This underscores a critical point: the current legal and ethical frameworks governing armed conflict have not evolved to keep pace with AI capabilities. International Humanitarian Law (IHL), which emphasizes distinction, proportionality, and military necessity, becomes increasingly difficult to enforce when opaque algorithms replace human cognition.

Some key concerns include:

- Accountability: Who is responsible when an AI system commits a war crime—the programmer, the commander, or the machine itself?

- Transparency: Many AI systems, especially those built on deep learning, are "black boxes," which makes their decisions difficult to interpret or challenge.

- Inequality: Only technologically advanced nations have the capacity to develop such systems, creating power imbalances and marginalizing voices from less affluent regions in global negotiations.

Efforts at establishing global norms are underway. The United Nations, for example, has initiated discussions on regulating LAWS through the Convention on Certain Conventional Weapons (CCW). However, progress remains fragmented due to geopolitical competition and differing philosophical approaches to sovereignty and warfare.

What We Must Learn—Before It's Too Late

The discussion around AI in the military is not just academic or philosophical; it holds real-world implications for peace, justice, and humanity. Here are some key insights and takeaways that must guide future action:

1. Meaningful Human Control Must Be Non-Negotiable

There is broad agreement among many ethicists and policy experts that human oversight should remain central to all AI-driven military operations. Systems capable of lethal force should not operate without a human in the decision-making loop.

2. Inclusive Governance Is Crucial

Developing frameworks for AI use in conflict cannot be left solely to powerful nations or tech conglomerates. Voices from the Global South, civil society, and marginalized communities need to be included in rule-making processes to ensure truly ethical and universally applicable outcomes.

3. Global Cooperation Over Unilateral Innovation

While national security concerns drive investment in military AI, international cooperation is essential to prevent misuse. Establishing treaties, verification mechanisms, and transparency protocols could serve as the backbone of a more sustainable global security architecture.

4. Investing in Ethics as Much as in Engineering

Governments and institutions must invest in ethics research on the same scale as they invest in technological R&D. Ethical audits, AI risk assessments, and human rights impact analyses should be mandatory components of any military AI rollout.

5. Education and Public Engagement Matter

An informed public can significantly influence policy outcomes. Raising awareness about the implications of AI in warfare—through education, journalism, and public debate—will empower citizens to demand accountability and ethical governance from their leaders.

"Military AI is not just a technological issue; it's a societal one," Gill emphasizes. This statement encapsulates the transformative scope of AI's military applications. We are no longer just building smarter machines—we are redefining the nature of war, peace, and what it means to be human in a conflict-ridden world.

Where Do We Draw the Line?

As AI continues to evolve, it increasingly blurs the boundaries between science fiction and operational reality. But with great power comes great responsibility. The time to draw ethical lines, codify legal responsibilities, and foster global consensus is not after catastrophic incidents have occurred—but now, when we still have the chance.

In 20 years, will we look back and cringe at how fast we let algorithms into our arsenals without fully understanding the consequences? Or will we feel proud that we took a collective stand to prioritize humanity over expediency?

What kind of future are we designing when we build machines that can wage our wars for us—and will we be ready to live in it?


Contact Us

Need a custom solution? Let's connect!

eric.sanders@thedigiadvantagepro.com

772-228-1085

© 2025. All rights reserved.