Could AI Make Pandemics More Likely?

AI advancements increase pandemic risk by enabling easier bioweapon creation; safeguards and regulations are essential to mitigate potential biothreats.

LLM · ARTIFICIAL INTELLIGENCE · TECHNOLOGY

Eric Sanders

7/1/2025 · 4 min read

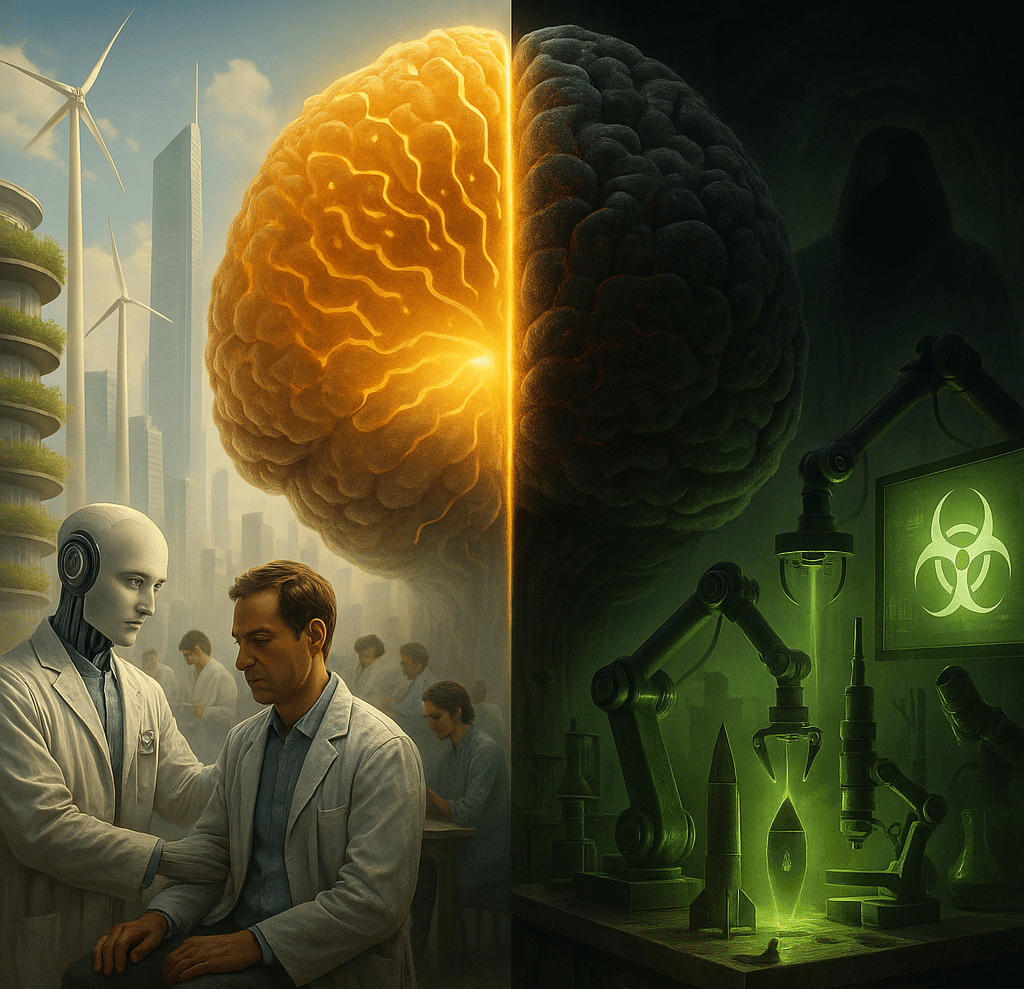

When Technology Poses a Threat: How AI Could Amplify Pandemic Risk

Our world has barely had time to heal from the scars of COVID-19, and that serves as a sobering reminder to me that the next pandemic might come not just from nature’s randomness, but from the very technology we’ve worked so hard to develop to advance our society. I recently read a research article that warns of a startling (and very real) possibility: artificial intelligence is sharply increasing the potential for novel bioweapons, making a new pandemic more likely. At first my bleeding heart goes, "No way... Humans? Hurting one another? Can't be..." But it’s my wake-up call: the intersection of AI and biosecurity needs urgent attention, here and now.

The AI Boogeyman: Unseen Danger Behind AI Breakthroughs

Artificial intelligence has indisputably transformed countless industries. I mean, look at medicine, finance, transportation, or even entertainment. It's freaking everywhere now. The powerful algorithms that analyze data and generate solutions, once relegated to niche applications, now possess an astonishing ability to design biological agents with frightening precision. According to a recent article from Time, these AI advancements drastically lower the barriers to creating harmful pathogens, enabling actors with malicious intent to engineer bioweapons more easily than ever before. (Not exactly the kind of thing we think about when asking ChatGPT about fixing our attachment style, or whether I should be worried that my dog's reverse sneezing sounds different.)

After reading the article, I daydreamed about the idea as if it were a sci-fi nightmare. As it sank in over time, I realized that it is in fact real and could be an immediate risk emerging at the crossroads of cutting-edge technology and global health security. By automating and accelerating the design of viruses or bacteria, including tweaking viruses to evade existing vaccines or become more transmissible, the AI we use and keep improving doesn't just aid scientists in developing cures. It can just as easily empower creators of bio-threats, contaminants, or something worse that I can't even comprehend.

AI: Good or Evil? A Double-Edged Sword of Innovation

Writing this, I find myself grappling with a deep ambivalence about technological progress. On the one hand, I think AI-driven innovations hold unparalleled promise, giving us access to technology, skill, and understanding like we never had before. I also think they’re our best tools for combating diseases, understanding genetic codes, and pushing the boundaries of medicine. On the other, I worry about individuals who are willing to open Pandora’s box, now that anyone with enough computational resources and biological knowledge might conjure biological horrors. How many times have we blindly celebrated tech as an unmixed blessing, without considering its dark potential? How many safeguards have been overlooked in the rush to innovate?

There’s an uncomfortable lesson I'm taking to heart: innovation without governance is a recipe for disaster. History shows me that regulations are often reactive rather than proactive, and we scramble to legislate only after tragedy strikes. But in the age of AI, waiting for catastrophe is not an option. At the speed things now move, we can inflict tragedy just as fast as we can innovate for good.

Why Are AI Tools Making Bioweapons Easier to Create?

Traditionally, creating biological weapons required extensive expertise, resources, and access to rare materials. This high entry barrier limited the number of actors capable of developing such threats, but AI has shifted this paradigm by:

- Automating the design of pathogens by analyzing genetic sequences and predicting mutations that enhance virulence or transmissibility.

- Providing virtual laboratories where experimental processes can be simulated without needing physical bio-labs.

- Enabling faster development cycles, compressing years of research into weeks or days.

- Making detailed biological knowledge and processes accessible through open-source platforms and databases.

“Advancements in AI increase pandemic risk fivefold,” the Time article states bluntly, basing the claim not on speculation but on data-driven assessments of how the technology lowers these barriers.

The Urgency of Safeguards and Regulations

If AI can so easily transform bioweapons from theoretical threats into tangible realities, what are we doing to prevent misuse? Are we even doing enough to ensure the safety of people?

Unfortunately, I think the answer is: not nearly enough.

Governments and international organizations have frameworks for biosafety and biosecurity, but these are struggling to keep pace with technology’s rapid evolution. There is currently a very large gap between AI capabilities and our regulatory controls.

The article points out the essential need for:

- Robust international treaties addressing AI-enabled biological threats.

- Transparent monitoring of AI research and dual-use technologies.

- Investment in AI-driven pathogen detection and response systems.

- Collaboration between AI researchers, biologists, security experts, and policymakers to build a multisectoral defense strategy.

Without these, I don't think the risks stay merely hypothetical. The cost of inaction could be devastating, unleashing pandemics of unprecedented scale and origin.

Our Duty in Building Informed Vigilance

What can individuals, organizations, and governments realistically do to confront these emerging challenges?

1. Educate and Raise Awareness: Understanding that technological advances often bring novel risks leads to more informed discourse, which is critical for democratic oversight.

2. Promote Ethical AI Research: Encouraging AI scientists and biotech researchers to embrace ethical standards can reduce dual-use risks.

3. Support Policies for Transparency: Advocacy for open reporting and regulation helps governments identify and mitigate threats before they spiral out of control.

4. Invest in Pandemic Preparedness: Funding AI-enhanced epidemiological surveillance and rapid response infrastructures is an insurance policy against future crises.

This multi-layered approach balances the benefits of AI with prudent caution, transforming fear into preparation.

A Call to Contemplate Our Shared Responsibility

Our moment is defined by the collision of extraordinary technological promise with the potential for unseen biological risk. AI is neither inherently good nor evil. I think it reflects the intents of those who wield it. Ignoring the escalated danger it introduces into pathogen creation could prove a perilous act of denial.

As we navigate this precarious AI-integrated landscape, I can’t help but ask: How well are we truly prepared to handle a pandemic conceived not by chance, but by code? Or by a lack of caring, when we had a chance to do something and didn't? Who holds the reins on these powerful tools? And perhaps most importantly, will humanity unite to set bounds around a technology that could otherwise rewrite the story of life and death on this planet?

The stakes could not be higher. And this conversation must begin now.
